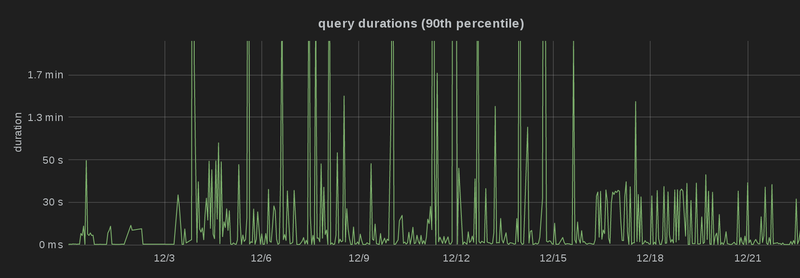

Munin is a great tool. If you can script it, you can monitor it with

munin. Unfortunately, however, munin is slow; that is, it will take

snapshots once every five minutes, and not look at systems in between.

If you have a short load spike that takes just a few seconds, chances

are pretty high munin missed it. It also comes with a great

webinterfacefrontendthing that allows you to dig deep in the history of

what you've been monitoring.

By the time munin tells you that your Kerberos KDCs are all down, you've

probably had each of your users call you several times to tell you that

they can't log in. You could use nagios or one of its brethren, but it

takes about a minute before such tools will notice these things, too.

Maybe use CollectD then? Rather than check once every several minutes,

CollectD will collect information every few seconds. Unfortunately,

however, due to the performance requirements to accomplish that (without

causing undue server load), writing scripts for CollectD is not as easy

as it is for Munin. In addition, webinterfacefrontendthings aren't

really part of the CollectD code (there are several, but most that I've

looked at are lacking in some respect), so usually if you're using

CollectD, you're missing out some.

And collectd doesn't do the nagios thing of actually telling you when

things go down.

So what if you could see it when things go bad?

At one customer, I came in contact with Frank, who wrote ExtreMon, an

amazing tool that allows you to visualize the CollectD output as things

are happening, in a full-screen, fully customizable visualization of the

data. The problem is that ExtreMon is rather... complex to set up. When

I tried to talk Frank into helping me get things set up for myself so I

could play with it, I got a reply along the lines of...

well, extremon requires a lot of work right now... I really want to

fix foo and bar and quux before I start documenting things. Oh, and

there's also that part which is a dead end, really. Ask me in a few

months?

which is fair enough (I can't argue with some things being suboptimal),

but the code exists, and (as I can see every day at $CUSTOMER) actually

works. So I decided to just figure it out by myself. After

all, it's free software, so if it doesn't work I can just read the

censored code.

As the manual explains, ExtreMon is a plugin-based system; plugins can

add information to the "coven", read information from it, or both. A

typical setup will run several of them; e.g., you'd have the

from_collectd plugin (which parses the binary network protocol used by

collectd) to get raw data into the coven; you'd run several aggregator

plugins (which take that raw data and interpret it, allowing you to

express things along the lines of "if the system's load gets above X,

set load.status to warning"); and you'd run at least one output plugin

so that you can actually see the damn data somewhere.
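
To make the aggregator idea a bit more concrete, here is a minimal sketch in Python of the kind of rule described above. It does not use ExtreMon's actual plugin API: the function names, the label layout (`<host>.load.shortterm`) and the thresholds are all assumptions made up for illustration.

```python
# Illustrative sketch only: this is NOT ExtreMon's plugin API.
# The label layout and the thresholds below are assumptions.

WARNING_ABOVE = 2.0   # hypothetical value for "X"
CRITICAL_ABOVE = 5.0  # hypothetical second threshold

LOAD_SUFFIX = ".load.shortterm"  # assumed label suffix for raw load values


def load_status(load, warning=WARNING_ABOVE, critical=CRITICAL_ABOVE):
    """Map a raw load value to a status string."""
    if load > critical:
        return "critical"
    if load > warning:
        return "warning"
    return "ok"


def aggregate(records):
    """Derive '<host>.load.status' records from raw load records.

    `records` maps labels to numeric values; labels that do not end in
    LOAD_SUFFIX are ignored.
    """
    derived = {}
    for label, value in records.items():
        if label.endswith(LOAD_SUFFIX):
            host = label[: -len(LOAD_SUFFIX)]
            derived[host + ".load.status"] = load_status(value)
    return derived
```

With these assumed thresholds, feeding it `{"com.example.myhost.load.shortterm": 3.0}` gives back `{"com.example.myhost.load.status": "warning"}`. A real plugin would read from and write to the coven rather than take a dictionary.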

While setting up ExtreMon as is isn't as easy as one would like, I did

manage to get it to work. Here's what I had to do.

You will need:

- A monitor with a FullHD (or better) resolution. Currently, the display

frontend of ExtreMon assumes it has a FullHD display at all times.

Even if you have a lower resolution. Or a higher one.

- Python3

- OpenJDK 6 (or better)

First, we clone the ExtreMon git repository:

git clone https://github.com/m4rienf/ExtreMon.git extremon

cd extremon

There's a README there which explains the bare necessities of getting

the coven to work. Read it. Do what it says. It's not wrong. It's not

entirely complete, though; it fails to mention that you need to

- Install CollectD (which is required for its types.db)

- Configure CollectD to have a line like

Hostname "com.example.myhost"

rather than the (usual) FQDNLookup true. This is because extremon

uses the java-style reverse hostname, rather than the internet-style

FQDN.
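
If the reversed form isn't obvious, the conversion can be sketched in a few lines of Python. This is just a helper for working out what to put in collectd.conf, not something ExtreMon ships; the function names are made up, and `myhost.example.com` is an example hostname.

```python
def reverse_hostname(fqdn):
    """Turn an internet-style FQDN into a java-style reversed name."""
    # Drop empty labels so a trailing dot ("myhost.example.com.") is harmless.
    labels = [part for part in fqdn.strip().split(".") if part]
    return ".".join(reversed(labels))


def collectd_hostname_line(fqdn):
    """Build the Hostname line for collectd.conf from a regular FQDN."""
    return 'Hostname "{}"'.format(reverse_hostname(fqdn))
```

For example, `collectd_hostname_line("myhost.example.com")` returns `Hostname "com.example.myhost"`, which matches the line shown above.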

Make sure the dump.py script outputs something from collectd. You'll

know when it shows something not containing "plugin" or "plugins" in the

name. If it doesn't, fiddle with the #x3. lines at the top of the

from_collectd file until it does. Note that ExtreMon uses inotify to

detect whether a plugin has been added to or modified in its plugins

directory; so you don't need to do anything special when updating

things.

Next, we build the java libraries (which we'll need for the display

thing later on):

cd java/extremon

mvn install

cd ../client/

mvn install

This will download half the Internet, build some java sources, and drop

the precompiled .jar files in your $HOME/.m2/repository.

We'll now build the display frontend. This is maintained in a separate

repository:

cd ../..

git clone https://github.com/m4rienf/ExtreMon-Display.git display

cd display

mvn install

This will download the other half of the Internet, and then fail,

because Frank forgot to add a few repositories. Patch (and pull request)

on github.

With that patch, it will build, but things will still fail when trying

to sign a .jar file. I know of four ways to fix that particular

problem:

- Add your passphrase for your java keystore, in cleartext, to the

pom.xml file. This is a terrible idea.

- Pass your passphrase to maven, in cleartext, by using some command

line flags. This is not much better.

- Ensure you use the maven-jarsigner-plugin 1.3.something or above, and

figure out how the maven encrypted passphrase store thing works. I

failed at that.

- Give up on trying to have maven sign your jar file, and do it

manually. It's not that hard, after all.

If you're going with one of the first three, you're on your own. For the last

option, however, here's what you do. First, you need a key:

keytool -genkeypair -alias extremontest

After you enter all the information that keytool will ask for, it will

generate a self-signed code signing certificate, valid for 90 days by

default, called extremontest. Producing a code signing certificate with

longer validity and/or one which is signed by an actual CA is left as an

exercise to the reader.

Now, we will sign the .jar file:

jarsigner target/extremon-console-1.0-SNAPSHOT.jar extremontest

There. Who needs help from the internet to sign a .jar file? Well, apart

from this blog post, of course.

You will now want to copy your freshly-signed .jar file to a location

served by HTTPS. Yes, HTTPS, not HTTP; ExtreMon-Display will fail on

plain HTTP sites.

Download this SVG file, and open it in an editor. Find all references to

be.grep as well as those to barbershop and replace them with your own

prefix and hostname. Store it along with the .jar file in a useful

directory.

Download this JNLP file, and store it in the same location (or you might

want to actually open it with "javaws" to see the very basic animated

idleness of my system). Open it in an editor, and replace any references

to barbershop.grep.be with the location where you've stored your signed

.jar file.

Add the chalice_in_http plugin from the plugins directory. Make sure

to configure it correctly (by way of its first few comment lines) so

that its input and output filters are set up right.

Add the configuration snippet in section 2.1.3 of the manual (or

something functionally equivalent) to your webserver's configuration.

Make sure to require authentication, since chalice_in_http is an input

mechanism.

Add the chalice_out_http plugin from the plugins directory. Make

sure to configure it correctly (by way of its first few comment lines)

so that its input and output filters are set up right.

Add the configuration snippet in section 2.2.1 of the manual (or

something functionally equivalent) to your webserver's configuration.

Authentication isn't strictly required for the output plugin, but you

might wish for it anyway if you care whether the whole internet can see

your monitoring.

Now run javaws https://url/x3console.jnlp to start ExtreMon-Display.

At this point, I got stuck for several hours. Whenever I tried to run

x3mon, this java webstart thing would tell me simply that things failed.

When clicking on the "Details" button, I would find an error message

along the lines of "Could not connect (name must not be null)". It would

appear that the Java people believe this to be a proper error message

for a fairly large number of constraints, all of which are slightly

related to TLS connectivity. No, it's not the keystore. No, it's not an

API issue, either. Or any of the loads of other rabbit holes that I dug

myself into.

Instead, you should simply make sure you have Server Name Indication

enabled. If you don't, the defaults in Java will cause it to refuse to

even try to talk to your webserver.

The ExtreMon github repository comes with a bunch of extra plugins; some

are special-case for the place where I first learned about it (and

should therefore probably be considered "examples"), others are

general-purpose plugins which implement things like "is the system

load within reasonable limits". Be sure to check them out.

Note also that while you'll probably be getting most of your data from

CollectD, you don't actually need to do that; you can write your own

plugins, completely bypassing collectd. Indeed, the from_collectd

thing we talked about earlier is, simply, also a plugin. At $CUSTOMER,

for instance, we have one plugin which simply downloads a file every so

often and checks it against a checksum, to verify that a particular

piece of nonlinear software hasn't gone astray yet again. That doesn't

need collectd.
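
The core of such a check is small. What follows is a rough sketch in Python, not the plugin used at $CUSTOMER: the function names are invented, SHA-256 is an assumption (any checksum would do), and the part that hands the result to the coven is left out because it depends on ExtreMon's plugin interface.

```python
import hashlib
import urllib.request


def sha256_hex(data):
    """Return the SHA-256 digest of `data` (bytes) as a hex string."""
    return hashlib.sha256(data).hexdigest()


def checksum_matches(data, expected_hex):
    """Compare downloaded bytes against an expected hex digest."""
    return sha256_hex(data) == expected_hex.strip().lower()


def check_url(url, expected_hex, timeout=10):
    """Download `url` and return 1 if its checksum matches, else 0.

    A failed download also counts as 0, so the value can be fed
    straight into a status rule.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            data = response.read()
    except OSError:  # urllib's URLError is a subclass of OSError
        return 0
    return 1 if checksum_matches(data, expected_hex) else 0
```

A plugin built around this would call `check_url()` every so often and publish the 0 or 1 under a label of its own choosing.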

The example above will get you a small white bar, the width of which is

defined by the cpu "idle" statistic, as reported by CollectD. You

probably want more. The manual (chapter 4, specifically) explains how to

do that.

Unfortunately, in order for things to work right, you need to pretty

much manually create an SVG file with a fairly strict structure. This is

the one thing which Frank tells me is a dead end and needs to be pretty

much rewritten. If you don't feel like spending several days manually

drawing a schematic representation of your network, you probably want to

wait until Frank's finished. If you don't mind, or if you're like me and

you're impatient, you'll be happy to know that you can use inkscape to

make the SVG file. You'll just have to use the dialog behind

ctrl+shift+X. A lot.

Once you've done that though, you can see when your server is down.

Like, now.

Before your customers call you.

I have released whatmaps 0.0.9, a tool to check which processes

map shared objects of a certain package. It can integrate into apt to

automatically restart services after a security upgrade.

This release fixes the integration with recent systemd (as in Debian

Jessie), makes logging more consistent and eases integration into

downstream distributions. It's available in Debian Sid and Jessie and

will show up in Wheezy-backports soon.

This blog is flattr enabled.

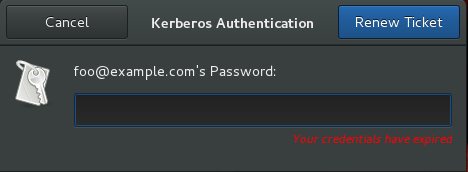

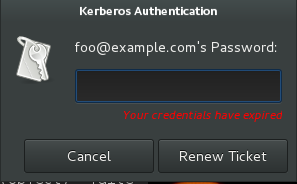

This makes krb5-auth-dialog better integrated into other desktops

again thanks to

Today was a really good day, right up until the end, when it wasn't so good,

but could have been a whole lot worse, so I'm grateful for that.

I've been wanting to

Last time I blogged about my running activities was after DebConf 13

in Switzerland, back in August.

At that time, I had just completed two great mountain races in one month

(Mont-Blanc Marathon, then EDF Cenis Tour, one being 42km and 2500m

positive climb and the other one being 50km and 2700m). EDF Cenis Tour

was my best result overall in a trail race, being ranked 40th out of

more than 300 runners and 3rd in my age category (men 50-59).

So, in late August, I was preparing for my "autumn challenge", a

succession of 3 long distance races in a row:

This is my monthly summary of my Debian-related activities. If you're among the people who

Dpkg

Like last month, I did almost nothing concerning dpkg. This will probably change in June now that the book is out.

The only thing worth noting is that I have helped Carey Underwood who was trying to diagnose why btrfs was performing so badly when unpacking Debian packages (compared to ext4). Apparently this already resulted in some btrfs improvements.

But not as much as could be hoped. The sync_file_range() calls that dpkg makes only force the writeback of the underlying data and not of the metadata. So the numerous fsync() calls that follow still create many journal transactions that would be better handled as one big transaction. As proof of this, replacing the fsync() with a sync() brings the performance on par with ext4.

(Beware: this is my own recollection of the discussion. While it should be close to the truth, it's probably not 100% accurate when speaking of the btrfs behaviour.)

Packaging

I uploaded new versions of smarty-gettext and smarty-validate because they were uninstallable after the removal of smarty. The whole history of smarty in Debian/Ubuntu has been a big FAIL since the start.

Once upon a time, there was a

thanks to lucas' last

This blog is

This blog post isn't only directed at ThinkPad owners, as most if not all notebook Linux users with Intel Core Duo 1/2 and i3/i5/i7 processors have been affected by this bug. And yes, this problem is present on the latest Debian Unstable and Ubuntu 11.10.

Prelude

I'm the owner of

Last weekend Dennis M. Ritchie, creator of the C Programming Language, the UNIX Operating System, the Plan 9 Operating System and many other key contributions to computing, passed away due to an extended illness. I learned about it yesterday